When you write code that interacts with the internet, you are entering an environment of unpredictability. Servers can be slow, networks can be unreliable, and responses can be delayed. The requests library is powerful, but by default, it will wait forever for a response. This makes the timeout parameter one of the most important tools for writing stable, production-ready applications.

The Danger of the Default: Why You Must Set a Timeout

Imagine your application makes an API call to an external service. If that service hangs, or stops responding partway through a request, your requests.get() call will simply wait. And wait. And wait. Without a timeout, your script can stay stuck on that single line of code indefinitely, unable to proceed, log an error, or retry. This is a fatal flaw for any serious application.

Mastering the timeout Parameter: A Practical Guide

Setting a timeout is incredibly simple. You pass the timeout argument with a value in seconds. There are two primary ways to do this.

1. The Simple Timeout

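This is the most common method. You pass a single number (an int or a float) of seconds. Requests applies that value separately to the connect phase and to the read phase, so timeout=5 behaves like timeout=(5, 5). It is not a limit on the total duration of the request: the read timeout fires only if the server sends no data for that many seconds.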

```python
import requests

try:
    # Wait a maximum of 5 seconds for the server to respond
    response = requests.get('https://api.example.com/data', timeout=5)
    print("Request was successful!")
except requests.exceptions.Timeout:
    print("The request timed out.")
```

2. The Granular Timeout (Connect vs. Read)

For more fine-grained control, you can provide a tuple of two floats. This allows you to set separate timeouts for connecting to the server and for reading the response.

timeout=(connect_timeout, read_timeout)

Connect Timeout: The number of seconds to wait for your client to establish a connection with the server.

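Read Timeout: The number of seconds to wait for the server to send data once the connection has been established. More precisely, it is the longest gap allowed between bytes received from the server, not a cap on the total time spent downloading the response.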

This is useful for dealing with servers that might be quick to connect but slow to process your request and generate a response.
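The difference between the two phases is easier to see with a concrete setup. The following self-contained sketch starts a throwaway local HTTP server with two deliberately slow endpoints. The SlowHandler class, the /trickle and /stall paths, and the demo() function are hypothetical names made up for this illustration, and the timings assume a reasonably idle machine.

- /trickle sends its five-byte body one byte every 0.4 seconds. The download takes about 1.6 seconds in total, yet a 1-second read timeout never fires, because no single gap reaches 1 second.
- /stall stays silent for 2 seconds, so a 0.5-second read timeout raises ReadTimeout.

```python
import threading
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

import requests


class SlowHandler(BaseHTTPRequestHandler):
    """Hypothetical local test server with two deliberately slow endpoints."""

    def do_GET(self):
        if self.path == "/trickle":
            # Headers go out at once; the 5-byte body then trickles in one byte
            # every 0.4 s (about 1.6 s in total, but never 1 s of silence).
            self.send_response(200)
            self.send_header("Content-Length", "5")
            self.end_headers()
            for i in range(5):
                if i:
                    time.sleep(0.4)
                self.wfile.write(b"x")
                self.wfile.flush()
        else:
            # Any other path (e.g. "/stall"): send nothing at all for 2 s.
            time.sleep(2)
            try:
                self.send_response(200)
                self.send_header("Content-Length", "0")
                self.end_headers()
            except OSError:
                pass  # the client has usually given up and closed the socket

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


def demo():
    # Port 0 asks the OS for any free port.
    server = ThreadingHTTPServer(("127.0.0.1", 0), SlowHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    base = f"http://127.0.0.1:{server.server_address[1]}"

    session = requests.Session()
    session.trust_env = False  # ignore any proxy settings in the environment
    results = {}
    try:
        # The read timeout limits the gap between bytes, not the total time.
        start = time.monotonic()
        r = session.get(f"{base}/trickle", timeout=(3.05, 1))
        results["trickle"] = (r.content, time.monotonic() - start)

        # No byte arrives within 0.5 s, so ReadTimeout is raised.
        try:
            session.get(f"{base}/stall", timeout=(3.05, 0.5))
            results["stall"] = "no timeout"
        except requests.exceptions.ReadTimeout:
            results["stall"] = "ReadTimeout"
    finally:
        session.close()
        server.shutdown()
        server.server_close()
    return results
```

Calling demo() should report the five-byte body after roughly 1.6 seconds for /trickle, and "ReadTimeout" for /stall. If you need a hard cap on the total time a request takes, the read timeout alone will not give you one.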

```python
import requests

try:
    # Wait up to 3.05 seconds to connect, then up to 10 seconds for the server to send data.
    # 3.05 is slightly more than a multiple of 3 s (the default TCP packet retransmission
    # window), which is what the Requests documentation recommends for connect timeouts.
    response = requests.get('https://api.example.com/slow_process', timeout=(3.05, 10))
    print("Request was successful!")
except requests.exceptions.ConnectTimeout:
    print("The connection timed out.")
except requests.exceptions.ReadTimeout:
    print("The read operation timed out.")
```

Production-Ready Code: Handling Timeout Exceptions

As the examples above show, a timeout is not a silent failure. When a deadline is exceeded, requests raises requests.exceptions.ConnectTimeout or requests.exceptions.ReadTimeout. Both are subclasses of requests.exceptions.Timeout, so a single except requests.exceptions.Timeout clause catches either one. An uncaught timeout will crash your script, so wrap the request in a try...except block. This lets you handle the error gracefully: log it, retry the request after a delay, or move on to the next task.
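The "retry after a delay" option can be sketched as a small helper with exponential backoff. Note the following assumptions:

- get_with_retries and its parameters are hypothetical names for this illustration, not part of Requests.
- The injectable get argument exists only so the helper can be exercised without a network.

Retrying like this is reasonable for idempotent GET requests. For a POST, a read timeout does not tell you whether the server already processed the request, so blind retries could duplicate work.

```python
import logging
import time
from typing import Callable

import requests

log = logging.getLogger(__name__)


def get_with_retries(
    url: str,
    attempts: int = 3,
    base_delay: float = 1.0,
    timeout=(3.05, 10),
    get: Callable[..., requests.Response] = requests.get,
) -> requests.Response:
    """GET url, retrying on timeouts with exponential backoff (hypothetical helper)."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return get(url, timeout=timeout)
        except requests.exceptions.Timeout as exc:
            # Catches both ConnectTimeout and ReadTimeout.
            log.warning("Attempt %d/%d for %s timed out: %s", attempt, attempts, url, exc)
            if attempt == attempts:
                raise  # out of attempts: let the caller decide what to do
            # Back off for base_delay, then 2x, 4x, ... before the next try.
            time.sleep(base_delay * 2 ** (attempt - 1))
```

In application code you would call it as get_with_retries('https://api.example.com/data'). With the defaults, it waits 1 second and then 2 seconds between the three attempts before re-raising the last Timeout.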

Real-World Application: Timeouts and Proxies in Web Scraping

The importance of timeouts is magnified in web scraping, especially when using proxies. When you are routing your requests through a large pool of proxies, it’s inevitable that some of those proxies will be slow, overloaded, or temporarily unresponsive. Waiting indefinitely for a bad proxy would destroy the efficiency of your scraper.

This is where an aggressive timeout strategy becomes essential for building a high-throughput data collection engine.

A Professional Workflow Example:

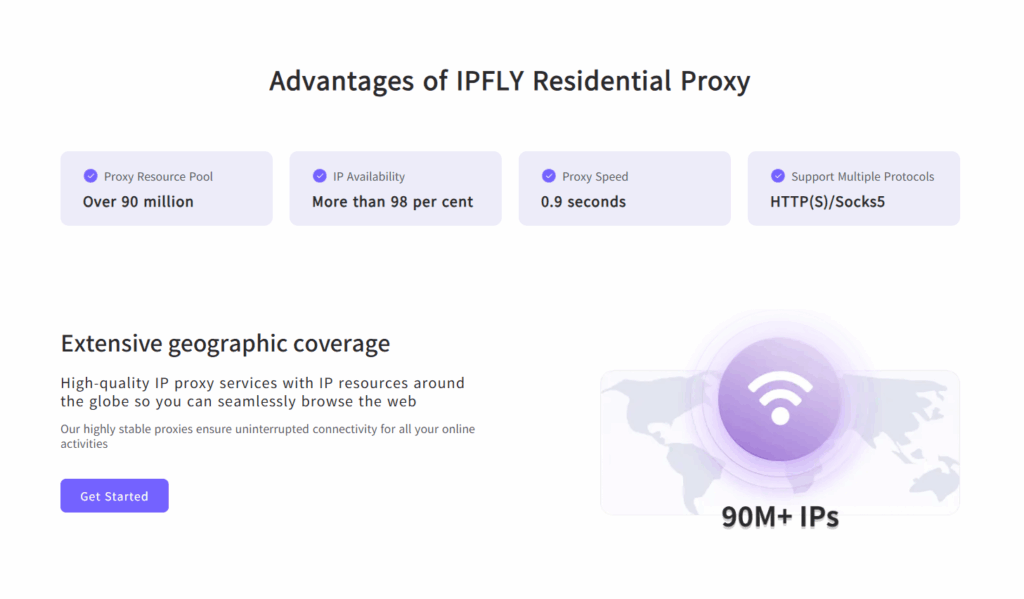

Imagine a developer is building a web scraper that uses IPFLY’s residential proxy network to gather data from an e-commerce site.

Their code would look something like this:

```python
import requests

# A list of proxies obtained from the IPFLY dashboard
proxy_pool = [...]

for proxy in proxy_pool:
    try:
        # Set an aggressive timeout for each proxy attempt
        response = requests.get(
            'https://ecommerce-site.com/product/123',
            proxies={"http": proxy, "https": proxy},
            timeout=15
        )
        # If successful, process the data and break the loop
        print(f"Success with proxy: {proxy}")
        break
    except requests.exceptions.Timeout:
        # If this proxy fails, log it and the loop will try the next one
        print(f"Proxy timed out: {proxy}. Trying next...")
        continue
```

In this workflow, a specific IPFLY proxy may take longer than 15 seconds to connect, or go 15 seconds without sending any data. In either case, the Timeout exception is caught, the script prints a message, and the loop immediately retries the request through the next proxy in the pool. This caps the time lost to any single unresponsive proxy and keeps the scraper collecting data at a steady rate.
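The loop above stops at the first success, but it leaves two gaps:

- If every proxy times out, it simply ends without reporting the failure.
- A proxy that refuses the connection outright typically raises requests.exceptions.ProxyError (a ConnectionError subclass), not Timeout, so the loop would crash instead of moving on.

A hypothetical helper that closes both gaps might look like the following. The choices below were made for this sketch and are not part of Requests or IPFLY's tooling:

- fetch_via_proxies, PROXY_FAILURES, the RuntimeError raised on exhaustion, and the injectable get parameter are all hypothetical.
- The (3.05, 15) default is an assumption. It keeps the original 15-second read budget while giving up sooner on proxies that never accept a connection.

```python
import logging
from typing import Callable, Sequence

import requests

log = logging.getLogger(__name__)

# Failures that mean "this proxy is unusable right now, try the next one".
# Timeout covers ConnectTimeout and ReadTimeout; ProxyError is typically raised
# when the proxy itself refuses or drops the connection.
PROXY_FAILURES = (requests.exceptions.Timeout, requests.exceptions.ProxyError)


def fetch_via_proxies(
    url: str,
    proxy_pool: Sequence[str],
    timeout=(3.05, 15),
    get: Callable[..., requests.Response] = requests.get,
) -> requests.Response:
    """Return the first response obtained through any proxy in proxy_pool (hypothetical helper)."""
    for proxy in proxy_pool:
        try:
            response = get(url, proxies={"http": proxy, "https": proxy}, timeout=timeout)
        except PROXY_FAILURES as exc:
            log.warning("Proxy %s failed (%s); trying next", proxy, type(exc).__name__)
            continue
        log.info("Success with proxy %s", proxy)
        return response
    # Reaching this line means every proxy in the pool failed.
    raise RuntimeError(f"All {len(proxy_pool)} proxies failed for {url}")
```

Raising at the end forces the caller to decide what an exhausted pool means, for example requeueing the URL, rather than silently continuing with no response.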

The timeout parameter is a small piece of code with a massive impact on the stability and reliability of your application. The golden rule for any developer should be: never make a network request without a timeout. This best practice becomes absolutely critical when building robust systems that interact with external resources, whether they are third-party APIs or a dynamic, high-performance proxy network from a provider like IPFLY.
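One way to make that rule hard to forget is to route requests through a Session that supplies a default timeout. TimeoutSession and default_timeout are hypothetical names, not part of Requests. The sketch relies on the fact that Session.get(), post() and the other verb methods all delegate to Session.request() with keyword arguments. Two caveats apply:

- The module-level requests.get() creates its own plain Session, so only calls made through a TimeoutSession instance get the default.
- A caller who explicitly passes timeout=None still disables it.

```python
import requests


class TimeoutSession(requests.Session):
    """A Session that applies a default timeout to every request (hypothetical helper)."""

    def __init__(self, default_timeout=(3.05, 10)):
        super().__init__()
        self.default_timeout = default_timeout

    def request(self, method, url, **kwargs):
        # Fill in the timeout only when the caller did not pass one;
        # an explicit timeout=... on a single call still takes precedence.
        kwargs.setdefault("timeout", self.default_timeout)
        return super().request(method, url, **kwargs)
```

Typical usage would be `with TimeoutSession() as session: response = session.get('https://api.example.com/data')`. Every call made through that session then gets a (3.05, 10) timeout unless it specifies its own.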